Portfolio item number 1

Short description of portfolio item number 1

Published in CVPR 2020 [Paper] [Code]

Citation: Giannis Daras, Augustus Odena, Han Zhang, Alexandros G. Dimakis, "Your Local GAN: Designing Two Dimensional Local Attention Mechanisms for Generative Models", CVPR 2020

Published in NeurIPS 2020 [Paper] [Code]

Citation: Giannis Daras, Nikita Kitaev, Augustus Odena, Alexandros G. Dimakis, "SMYRF: Efficient Attention using Asymmetric Clustering", NeurIPS 2020

Published in ICML 2021 [Paper] [Code]

Citation: Giannis Daras, Joseph Dean, Ajil Jalal, Alexandros G. Dimakis, "Intermediate Layer Optimization for Inverse Problems using Deep Generative Models", ICML 2021

Published in NeurIPS 2021 [Paper] [Code]

Citation: Ajil Jalal, Marius Arvinte, Giannis Daras, Eric Price, Alexandros G. Dimakis, Jonathan I. Tamir, "Robust Compressed Sensing MRI with Deep Generative Priors", NeurIPS 2021

Preprint [Paper]

Citation: Giannis Daras, Wen-Sheng Chu, Abhishek Kumar, Dmitry Lagun, Alexandros G. Dimakis, "Solving Inverse Problems with NerfGANs"

Published in NeurIPS 2022 Workshop on Score-Based Methods [Paper]

Citation: Giannis Daras (*), Alexandros G. Dimakis, "Discovering the Hidden Vocabulary of DALLE-2", NeurIPS 2022 Workshop on Score-Based Methods

Published in NeurIPS 2022 [Paper] [Code]

Citation: Giannis Daras (*), Negin Raoof (*), Zoi Gkalitsiou, Alexandros G. Dimakis, "Multitasking Models are Robust to Structural Failure: A Neural Model for Bilingual Cognitive Reserve", NeurIPS 2022

Published in ICML 2022 [Paper] [Code]

Citation: Giannis Daras (*), Yuval Dagan (*), Alexandros G. Dimakis, Constantinos Daskalakis, "Score-Guided Intermediate Layer Optimization: Fast Langevin Mixing for Inverse Problems", ICML 2022

Published in TMLR 2023 [Paper]

Citation: Giannis Daras, Mauricio Delbracio, Hossein Talebi, Alexandros G. Dimakis, Peyman Milanfar, "Soft Diffusion: Score Matching for General Corruptions", TMLR 2023

Published as an Oral in the NeurIPS 2022 SBM Workshop [Paper] [Code]

Citation: Giannis Daras, Alexandros G. Dimakis, "Multiresolution Textual Inversion", NeurIPS 2022 SBM Workshop

Published in NeurIPS 2023 [Paper] [Code]

Citation: Giannis Daras, Yuval Dagan, Alexandros G. Dimakis, Constantinos Daskalakis, "Consistent Diffusion Models: Mitigating Sampling Drift by Learning to be Consistent", NeurIPS 2023

Published in ICML 2023 [Paper]

Citation: Sitan Chen, Giannis Daras, Alexandros G. Dimakis, "Restoration-Degradation Beyond Linear Diffusions: A Non-Asymptotic Analysis for DDIM-Type Samplers", ICML 2023

Published as an Oral in NeurIPS 2023 [Paper] [Code]

Citation: Samir Yitzhak Gadre, Gabriel Ilharco, Alex Fang, Jonathan Hayase, Georgios Smyrnis, Thao Nguyen, Ryan Marten, Mitchell Wortsman, Dhruba Ghosh, Jieyu Zhang, Eyal Orgad, Rahim Entezari, Giannis Daras, Sarah Pratt, Vivek Ramanujan, Yonatan Bitton, Kalyani Marathe, Stephen Mussmann, Richard Vencu, Mehdi Cherti, Ranjay Krishna, Pang Wei Koh, Olga Saukh, Alexander Ratner, Shuran Song, Hannaneh Hajishirzi, Ali Farhadi, Romain Beaumont, Sewoong Oh, Alex Dimakis, Jenia Jitsev, Yair Carmon, Vaishaal Shankar, Ludwig Schmidt, "DataComp: In search of the next generation of multimodal datasets", NeurIPS 2023

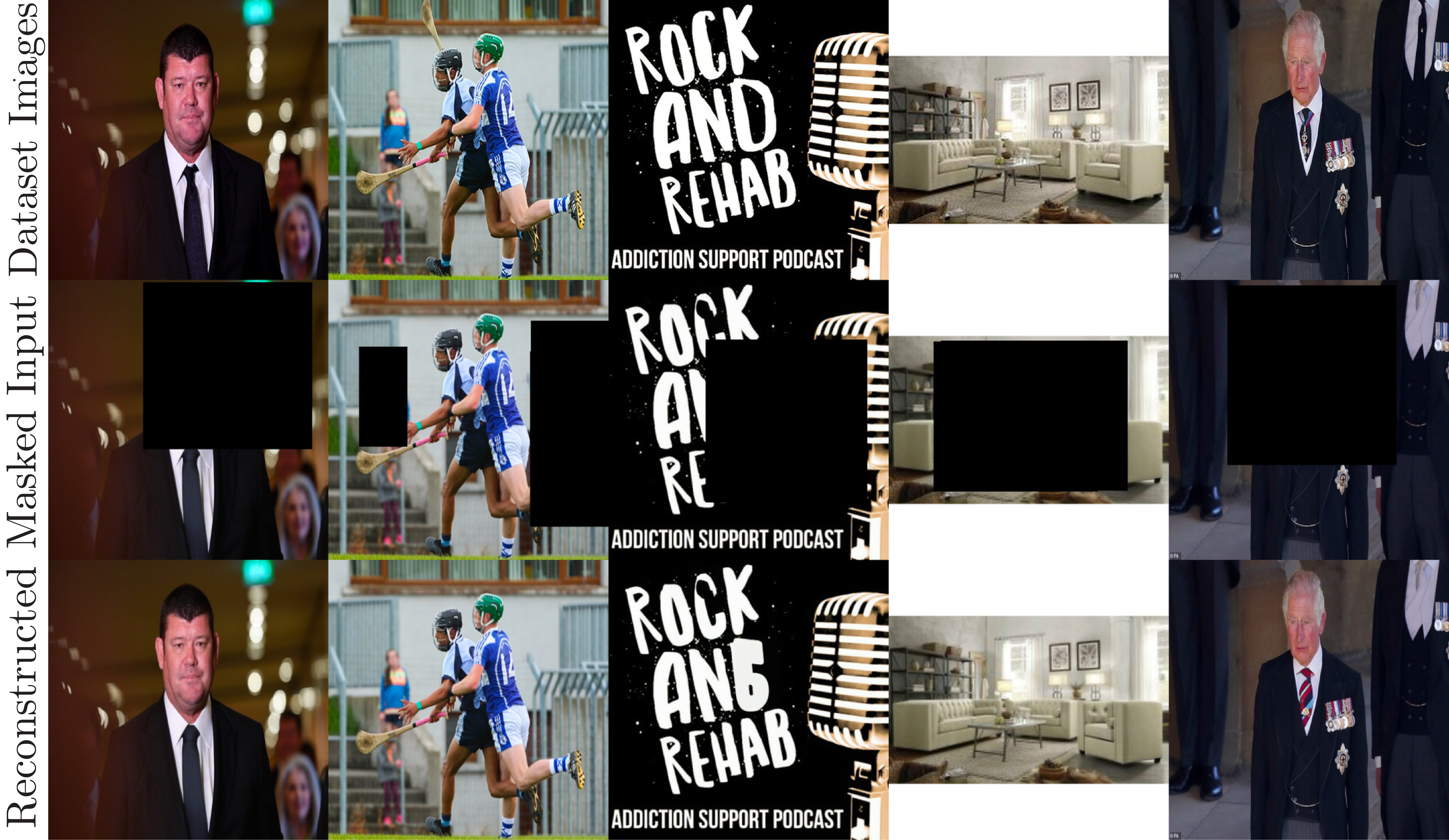

Published in NeurIPS 2023 [Paper] [Code]

Citation: Giannis Daras, Kulin Shah, Yuval Dagan, Aravind Gollakota, Alexandros G. Dimakis, Adam Klivans, "Ambient Diffusion: Learning Clean Distributions from Corrupted Data", NeurIPS 2023

Published in NeurIPS 2023 [Paper] [Code]

Citation: Litu Rout, Negin Raoof, Giannis Daras, Constantine Caramanis, Alexandros G. Dimakis, Sanjay Shakkottai, "Solving Linear Inverse Problems Provably via Posterior Sampling with Latent Diffusion Models", NeurIPS 2023

Presented my Google Summer of Code 2018 work on the project “Adding Greek Language to spaCy”, carried out under GFOSS (the Open Technologies Alliance).

In this talk, recent advances in applying Artificial Intelligence to Medicine are discussed at a roundtable session of the Athens Crossroad conference (12th Congress of the Hellenic Society of Thoracic & Cardiovascular Surgeons), under the theme “Artificial Intelligence”. The talk outlines use cases of Natural Language Processing algorithms, Brain-Computer Interfaces, and Deep Learning architectures for image processing.

One disadvantage of attention layers in neural network architectures is that their memory and time complexity is quadratic in the length of the input sequence. This talk addresses the following question: “Can we design attention layers with lower complexity that are still able to discover all dependencies in the input?” The answer appears to be yes: the problem of introducing sparsity into an attention layer can be modeled with Information Flow Graphs, and a sparse pattern can still capture all dependencies as long as every output position can receive information from every input position through some path across the attention steps.
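To make the information-flow condition concrete, here is a minimal sketch in Python. It assumes a non-causal 1D sequence and two hypothetical sparse patterns (a local block pattern followed by a strided pattern, similar in spirit to factorized sparse attention), not the 2D patterns from the talk. The helpers `local_mask`, `strided_mask`, `compose`, `has_full_information`, and `nonzeros` are illustrative names, not part of any library. Each attention step is a boolean mask, and composing masks with a boolean matrix product shows whether every output can reach every input.

```python
from typing import List

# mask[i][j] is True when output position i may attend to input position j.
Mask = List[List[bool]]


def local_mask(n: int, block: int) -> Mask:
    # Hypothetical local pattern: attend only within the same contiguous block.
    return [[i // block == j // block for j in range(n)] for i in range(n)]


def strided_mask(n: int, block: int) -> Mask:
    # Hypothetical strided pattern: attend to positions with the same offset in their block.
    return [[i % block == j % block for j in range(n)] for i in range(n)]


def compose(second: Mask, first: Mask) -> Mask:
    # Boolean matrix product: output i of `second` is connected to input j of `first`
    # if some intermediate position k links them (a path in the information flow graph).
    n = len(first)
    return [
        [any(second[i][k] and first[k][j] for k in range(n)) for j in range(n)]
        for i in range(n)
    ]


def has_full_information(masks: List[Mask]) -> bool:
    # `masks` are listed in the order the attention steps are applied.
    reach = masks[0]
    for mask in masks[1:]:
        reach = compose(mask, reach)
    # Full information: every output position can reach every input position.
    return all(all(row) for row in reach)


def nonzeros(mask: Mask) -> int:
    # Number of attended (output, input) pairs, a proxy for compute and memory cost.
    return sum(sum(row) for row in mask)
```

With `n = 16` and `block = 4`, dense attention touches 16 × 16 = 256 pairs, while each sparse step touches only 64 (`nonzeros(local_mask(16, 4))`), yet `has_full_information([local_mask(16, 4), strided_mask(16, 4)])` returns `True`. Stacking two local steps instead returns `False`, because information never leaves its block.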